The NVIDIA H100 Tensor Core GPU, built on the Hopper architecture, represents a major leap in accelerated computing. Designed to meet the growing demands of AI, high-performance computing (HPC), and data analytics, the NVIDIA H100 GPU sets new standards for performance, efficiency, and scalability. This article examines the architectural innovations, features, applications, and implications of the NVIDIA H100 GPU, and how it is reshaping the computing landscape.


Architecture and Design

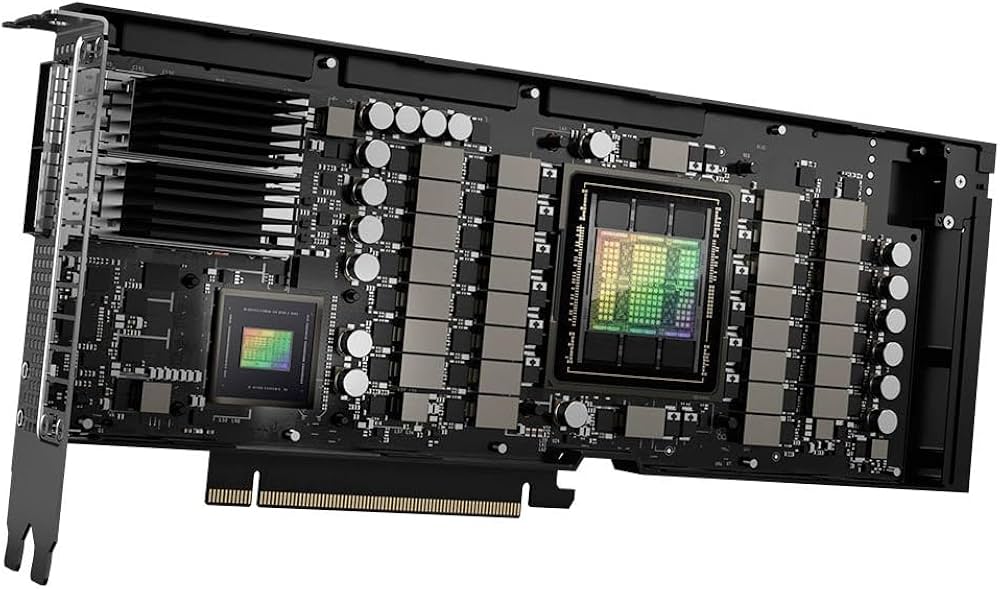

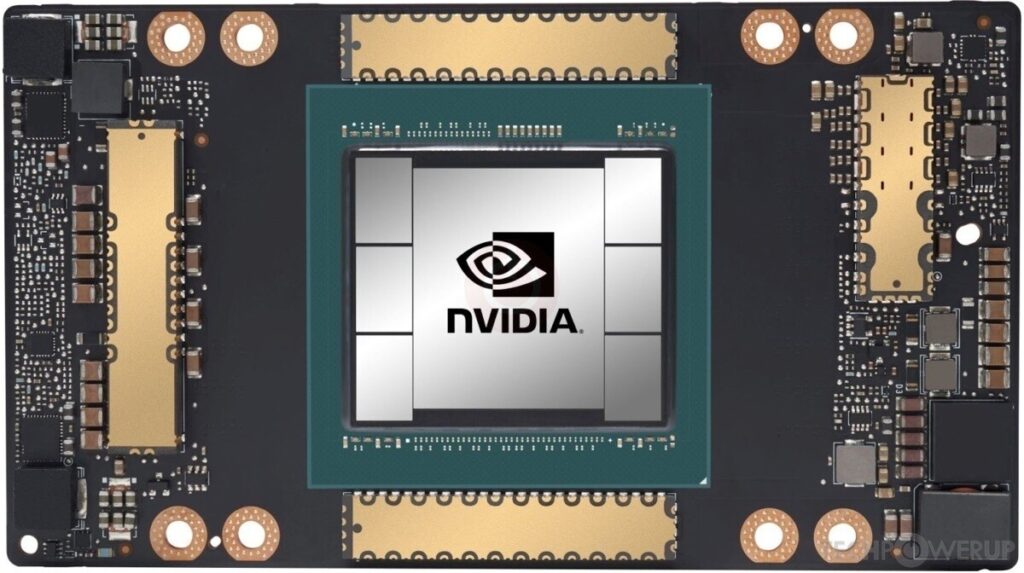

At the heart of the NVIDIA H100 GPU is the Hopper architecture, named after computing pioneer Grace Hopper. Fabricated on TSMC’s 4N process (customized for NVIDIA), the H100’s GH100 chip contains 80 billion transistors, making it one of the most complex chips ever built. The full GH100 die has 144 streaming multiprocessors (SMs), of which 132 are enabled on the H100 SXM5 and 114 on the H100 PCIe card. Each SM is redesigned for higher performance and energy efficiency.
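Because every SM carries the same set of execution units, the headline core counts follow directly from the number of enabled SMs. The sketch below assumes NVIDIA’s published Hopper figures of 128 FP32 CUDA cores and 4 fourth-generation Tensor Cores per SM, and SM counts of 144 (full GH100 die), 132 (H100 SXM5), and 114 (H100 PCIe); the helper name is illustrative.

```python
from typing import Tuple

# Assumed per-SM resources for Hopper, taken from NVIDIA's published GH100 figures.
FP32_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4

# Assumed number of enabled SMs in each configuration.
SM_COUNTS = {
    "GH100 (full die)": 144,
    "H100 SXM5": 132,
    "H100 PCIe": 114,
}


def unit_counts(num_sms: int) -> Tuple[int, int]:
    """Return (FP32 CUDA cores, Tensor Cores) for a GPU with num_sms enabled SMs."""
    return num_sms * FP32_CORES_PER_SM, num_sms * TENSOR_CORES_PER_SM


if __name__ == "__main__":
    for name, sms in SM_COUNTS.items():
        fp32, tensor = unit_counts(sms)
        print(f"{name}: {sms} SMs, {fp32} FP32 cores, {tensor} Tensor Cores")
```

This is also why product variants of the same chip differ in peak throughput: disabling SMs (often to improve manufacturing yield) removes their cores proportionally.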

A key innovation in the NVIDIA H100 GPU is the Tensor Memory Accelerator (TMA). TMA performs asynchronous bulk transfers of multidimensional data tiles between global memory and shared memory, in either direction, and takes over the address calculations that threads would otherwise perform. Because these copies run in the background, data movement can overlap with computation, which is particularly valuable for AI training and HPC tasks where data movement is often the critical bottleneck.
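The benefit of asynchronous copies can be illustrated with a simple timing model of double buffering: while one shared-memory buffer is being computed on, the next tile is loaded into a second buffer. The sketch below is a conceptual model only, not TMA’s programming interface (TMA is driven through CUDA); per-tile load and compute times are hypothetical inputs in arbitrary units.

```python
def sequential_time(load: float, compute: float, tiles: int) -> float:
    """Each tile is loaded and then computed; nothing overlaps."""
    return tiles * (load + compute)


def double_buffered_time(load: float, compute: float, tiles: int) -> float:
    """Two buffers: the next tile loads while the current tile is computed.

    After the first load, each tile costs the slower of the two stages,
    and the last tile still needs its own compute step.
    """
    if tiles <= 0:
        return 0.0
    return load + (tiles - 1) * max(load, compute) + compute


# Hypothetical kernel: 4 tiles, 2 units to load each tile, 3 units to compute it.
print(sequential_time(2, 3, 4), double_buffered_time(2, 3, 4))
```

With overlap, the run time approaches the cost of the slower stage alone, which is why hiding data movement behind computation matters most when load and compute times are similar.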

The Hopper architecture also improves how work is distributed and scheduled across the chip, helping keep its SMs busy and making better use of its computational resources.

Memory and Bandwidth

The NVIDIA H100 GPU comes with 80 GB of memory. The SXM5 version uses HBM3 and delivers about 3.35 TB/s of bandwidth, roughly 1.6 times the approximately 2 TB/s of the A100 80GB; the PCIe version uses HBM2e at about 2 TB/s. This bandwidth makes the H100 well suited to data-intensive applications such as large-scale AI models and scientific simulations.
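To put the bandwidth figure in perspective, the sketch below computes two back-of-the-envelope bounds, taking 3 TB/s as a round number: the minimum time to read the full 80 GB once, and the maximum batch-1 token rate of a memory-bound LLM decoder that must stream all of its weights for every generated token. The 13-billion-parameter FP16 model is a hypothetical example, and the helper names are illustrative.

```python
def min_read_time_s(num_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound on the time to read num_bytes once at the given bandwidth."""
    return num_bytes / bandwidth_bytes_per_s


def max_decode_tokens_per_s(weight_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Upper bound on batch-1 decoding speed if each token streams all weights once.

    Ignores KV-cache traffic, activations, and on-chip caching, so real
    throughput will be lower.
    """
    return bandwidth_bytes_per_s / weight_bytes


HBM_BANDWIDTH = 3e12  # assumed: 3 TB/s, a round figure for H100 SXM5 HBM3

# Reading all 80 GB once takes roughly 27 ms.
print(min_read_time_s(80e9, HBM_BANDWIDTH))
# Hypothetical 13B-parameter model at 2 bytes/parameter (26 GB): roughly 115 tokens/s.
print(max_decode_tokens_per_s(13e9 * 2, HBM_BANDWIDTH))
```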

The higher memory bandwidth is complemented by a 50 MB L2 cache (up from 40 MB on the A100), which keeps more of the working set on chip and reduces trips to HBM. Together, high-bandwidth memory and a large L2 cache help the NVIDIA H100 GPU sustain throughput on demanding workloads.
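A common way to reason about how bandwidth and caching interact is the roofline model: a kernel’s attainable throughput is the lower of the compute peak and its arithmetic intensity (FLOPs per byte of DRAM traffic) times memory bandwidth. Data reused from L2 does not count as DRAM traffic, so better caching raises effective intensity and moves a kernel toward the compute-bound side. The peak value below is an assumption (roughly the dense FP16/BF16 Tensor Core peak NVIDIA quotes for H100 SXM5), and the numbers are illustrative.

```python
PEAK_FLOPS = 989e12   # assumed: approx. dense FP16/BF16 Tensor Core peak, H100 SXM5
HBM_BANDWIDTH = 3e12  # assumed: 3 TB/s round figure


def attainable_flops(intensity: float,
                     peak_flops: float = PEAK_FLOPS,
                     bandwidth: float = HBM_BANDWIDTH) -> float:
    """Roofline bound: throughput is capped by compute or by memory traffic."""
    return min(peak_flops, intensity * bandwidth)


def ridge_point(peak_flops: float = PEAK_FLOPS,
                bandwidth: float = HBM_BANDWIDTH) -> float:
    """Arithmetic intensity (FLOP per DRAM byte) above which a kernel is compute-bound."""
    return peak_flops / bandwidth


# A kernel doing 10 FLOPs per DRAM byte is memory-bound at about 30 TFLOPS;
# it needs roughly 330 FLOPs per byte to reach the compute peak.
print(attainable_flops(10) / 1e12, ridge_point())
```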

Tensor Cores and Transformer Engine

The NVIDIA H100 GPU features fourth-generation Tensor Cores. NVIDIA rates the H100 at up to six times the Tensor Core performance of the A100 chip-to-chip, a figure that combines faster per-SM Tensor Cores, more SMs, higher clocks, and the new FP8 data format. FP8 (in E4M3 and E5M2 variants) doubles Tensor Core throughput compared with FP16 or BF16. Its reduced precision, combined with per-tensor scaling factors that keep values within FP8’s narrow range, allows faster computation with little or no loss of accuracy for many models.
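The role of scaling in FP8 can be seen by simulating it. The sketch below rounds values to the E4M3 format (3 mantissa bits, largest finite magnitude 448) in ordinary Python floats, after multiplying the tensor by a per-tensor scale that maps its largest magnitude onto 448. It is a numerical illustration under those assumptions, not NVIDIA’s hardware conversion or any library API; real casts can differ in details such as NaN and overflow handling.

```python
import math

E4M3_MAX = 448.0        # assumed largest finite E4M3 magnitude: 1.75 * 2**8
E4M3_MIN_EXP = -6       # smallest normal exponent (exponent bias 7)
E4M3_MANTISSA_BITS = 3


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value, saturating at +/-448.

    Simulated in float64; Python's round() gives round-half-to-even.
    """
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    mag = abs(x)
    if math.isinf(mag):
        return sign * E4M3_MAX
    _, e = math.frexp(mag)                     # mag = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, E4M3_MIN_EXP)             # binade; below 2**-6 use subnormal spacing
    step = 2.0 ** (exp - E4M3_MANTISSA_BITS)   # gap between neighboring E4M3 values
    return sign * min(round(mag / step) * step, E4M3_MAX)


def quantize_per_tensor(values):
    """Scale so the largest magnitude maps to E4M3_MAX, then round each value to E4M3."""
    amax = max((abs(v) for v in values), default=0.0)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) for v in values], scale


def dequantize(q, scale):
    """Undo the per-tensor scale to recover approximate original values."""
    return [v / scale for v in q]


q, scale = quantize_per_tensor([0.001, 0.02, -0.5, 1.25])
print(dequantize(q, scale))
```

Without the scale factor, 0.001 would fall into E4M3’s subnormal range and round to 2**-9 (about 0.00195), nearly double its true value; with scaling, every value in this example stays within about 6% of the original.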

Another major feature of the NVIDIA H100 GPU is the Transformer Engine, designed specifically to accelerate transformer-based models. Transformers are the backbone of many modern AI applications, including natural language processing (NLP), computer vision, and recommendation systems. The Transformer Engine pairs Hopper’s Tensor Cores with software that decides, layer by layer, whether to compute in FP8 or 16-bit precision and manages the scaling that keeps FP8 results accurate. This accelerates both training and inference and reduces time-to-solution for large models such as GPT-3.
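NVIDIA’s Transformer Engine documentation describes a “delayed scaling” approach in which the FP8 scale for a tensor is derived from the maximum absolute values (amax) recorded over recent steps, so the current step does not need an extra pass over its data before casting. The sketch below is a simplified, hypothetical model of that idea in plain Python; the class and parameter names are illustrative and are not the Transformer Engine API. The `margin` parameter backs the scale off by a power of two for extra headroom.

```python
from collections import deque
from typing import Iterable

FP8_E4M3_MAX = 448.0  # assumed largest finite E4M3 magnitude


class DelayedScaler:
    """Hypothetical per-tensor FP8 scaler driven by a rolling history of amax values."""

    def __init__(self, history_len: int = 16, margin: int = 0):
        self.history = deque(maxlen=history_len)  # amax values from recent steps
        self.margin = margin                      # extra power-of-two headroom

    def current_scale(self) -> float:
        """Scale for this step, computed only from amax values of earlier steps."""
        amax = max(self.history, default=0.0)
        if amax == 0.0:
            return 1.0
        return FP8_E4M3_MAX / amax / (2 ** self.margin)

    def update(self, tensor: Iterable[float]) -> None:
        """Record this step's amax so it can inform the scales of later steps."""
        self.history.append(max((abs(v) for v in tensor), default=0.0))


scaler = DelayedScaler(history_len=2)
for step_values in ([0.5, -2.0], [1.0], [0.25]):
    scale = scaler.current_scale()  # chosen before looking at this step's data
    # ... multiply step_values by scale, cast to FP8, and run the layer here ...
    scaler.update(step_values)
    print(scale)  # prints 1.0, then 224.0, then 224.0
```

The trade-off is that a sudden spike in magnitude is only reflected in the scale one step later, which is one reason a margin or a longer history can be useful.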

Performance and Scalability

The NVIDIA H100 GPU is engineered for high performance and scalability. NVIDIA reports up to four times faster training than the A100 on large language models such as GPT-3 (175 billion parameters). Shorter training runs translate into lower costs and faster development cycles for AI applications.
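“Up to” figures describe the best case. A standard way to estimate what a particular workload will see is Amdahl’s law: if only a fraction p of each training step (for example, the Tensor Core math) becomes k times faster while the rest (data loading, communication, host code) does not, the overall speedup is 1 / ((1 - p) + p / k). The fractions below are hypothetical.

```python
def amdahl_speedup(accelerated_fraction: float, factor: float) -> float:
    """Overall speedup when only accelerated_fraction of runtime becomes factor times faster."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    return 1.0 / ((1.0 - accelerated_fraction) + accelerated_fraction / factor)


# A 4x faster GPU only yields 4x overall if the whole step is accelerated.
for p in (1.0, 0.9, 0.7):
    print(p, round(amdahl_speedup(p, 4.0), 2))
```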

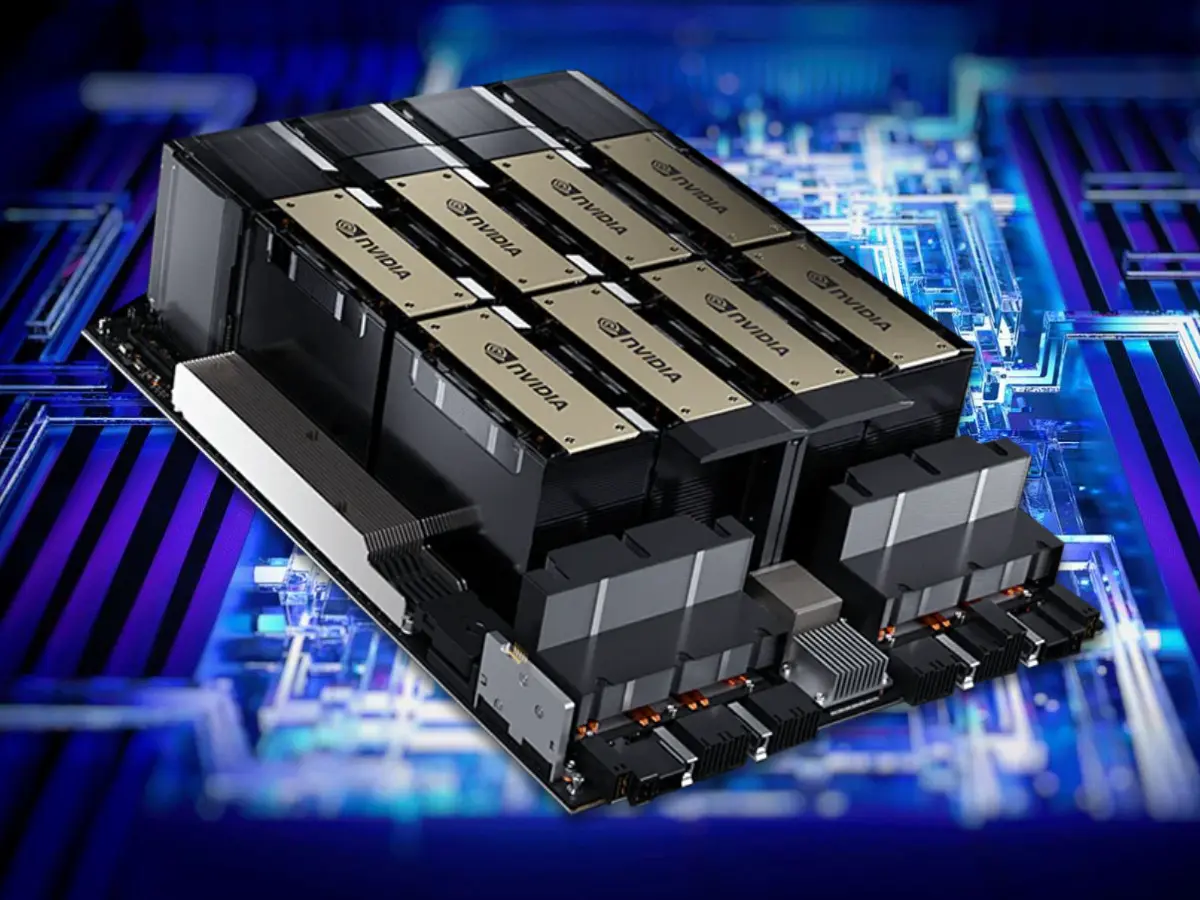

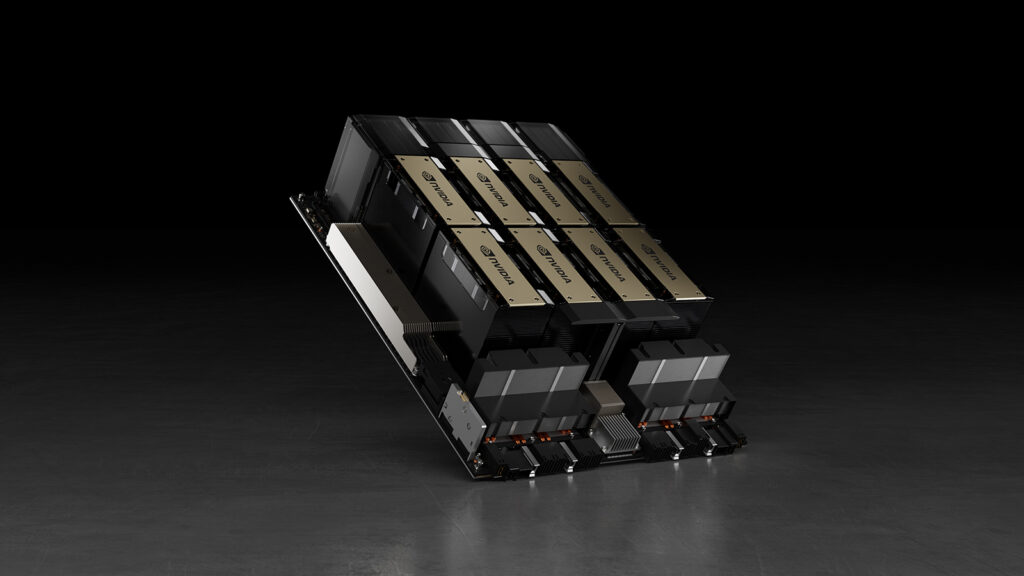

For scalability, the NVIDIA H100 GPU supports fourth-generation NVLink, which provides 900 GB/s of total bandwidth per GPU for GPU-to-GPU traffic, and PCIe Gen5 for connections to the host. These interconnects enable fast communication between GPUs, allowing workloads to scale efficiently from a single server to large GPU clusters. NVIDIA’s Magnum IO software further improves scalability by optimizing data movement across distributed systems, enabling AI supercomputers built from thousands of GPUs.
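Interconnect bandwidth matters most for collective operations such as the gradient all-reduce in data-parallel training. In the classic ring algorithm (a reduce-scatter followed by an all-gather), each of N GPUs sends 2(N - 1)/N times the buffer size, so the bandwidth term of its cost can be estimated as below. The 450 GB/s one-way figure (half of NVLink’s 900 GB/s bidirectional total) and the 1 GB buffer are assumptions; latency and protocol overheads are ignored.

```python
def ring_allreduce_time_s(buffer_bytes: float, num_gpus: int,
                          per_gpu_bytes_per_s: float) -> float:
    """Bandwidth term of a ring all-reduce: each GPU sends 2*(N-1)/N * buffer bytes."""
    if num_gpus < 2:
        return 0.0
    bytes_sent = 2 * (num_gpus - 1) / num_gpus * buffer_bytes
    return bytes_sent / per_gpu_bytes_per_s


NVLINK_ONE_WAY = 450e9  # assumed: half of the 900 GB/s bidirectional NVLink total

# Hypothetical 1 GB gradient buffer across 8 GPUs: roughly 3.9 ms.
print(ring_allreduce_time_s(1e9, 8, NVLINK_ONE_WAY))
```

Because 2(N - 1)/N approaches 2 as N grows, per-GPU traffic stays nearly constant with cluster size, which is one reason ring-style collectives scale well when links are fast.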

The NVIDIA H100 GPU is also the building block of NVIDIA’s DGX H100 systems and is supported by the NVIDIA AI Enterprise software suite, providing a comprehensive ecosystem for deploying and managing AI workloads at scale.

Security and Reliability

In today’s data-driven world, security and reliability are paramount. The NVIDIA H100 GPU addresses these concerns with confidential computing capabilities: data and code are isolated in a hardware-based trusted execution environment while in use, and transfers between the CPU and GPU are encrypted, protecting sensitive data from unauthorized access.

The NVIDIA H100 GPU also includes advanced error correction and fault tolerance mechanisms, ensuring reliable operation even under extreme workloads. These features make the NVIDIA H100 GPU an ideal choice for mission-critical applications in industries such as healthcare, finance, and autonomous systems.
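GPU memory on data-center parts is protected by error-correcting codes (ECC). The exact scheme NVIDIA uses is not covered in this article; as a textbook illustration of the principle, the sketch below implements a Hamming(7,4) code, which corrects any single flipped bit in a 7-bit codeword. Production memory ECC typically uses wider SECDED codes (single-error-correct, double-error-detect), so treat this as an analogy rather than the H100’s actual mechanism.

```python
def hamming74_encode(d):
    """Encode 4 data bits as a 7-bit codeword; parity bits sit at positions 1, 2, 4."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword):
    """Return (data bits, syndrome); a nonzero syndrome is the 1-based position of the flipped bit."""
    c = list(codeword)
    syndrome = 0
    for pos in range(1, 8):
        if c[pos - 1]:
            syndrome ^= pos  # XOR of the positions of all set bits
    if syndrome:
        c[syndrome - 1] ^= 1  # correct the single erroneous bit
    return [c[2], c[4], c[5], c[6]], syndrome


cw = hamming74_encode([1, 0, 1, 1])
cw[5] ^= 1  # simulate a single-bit error at position 6
print(hamming74_decode(cw))
```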


Applications

The NVIDIA H100 Tensor Core GPU is a versatile powerhouse with applications spanning multiple industries. Its capabilities are reshaping the way we approach AI, HPC, and data analytics.

1. Artificial Intelligence (AI)

The NVIDIA H100 GPU excels in AI training and inference, making it a cornerstone for developing next-generation AI models. Applications include:

- Natural Language Processing (NLP): Accelerates training for large language models, enabling breakthroughs in machine translation, sentiment analysis, and conversational AI.

- Computer Vision: Powers real-time object detection, image classification, and video analytics.

- Generative AI: Supports applications such as content creation, drug discovery, and design optimization.

2. High-Performance Computing (HPC)

In HPC, the NVIDIA H100 GPU’s computational power is unlocking new possibilities in scientific research and engineering:

- Climate Modeling: Enhances the accuracy and speed of simulations for predicting climate change.

- Genomics: Accelerates DNA sequencing and analysis, paving the way for personalized medicine.

- Astrophysics: Enables simulations of cosmic phenomena at unprecedented scales.

3. Data Analytics

The NVIDIA H100 GPU’s high memory bandwidth and computational capabilities make it ideal for processing massive datasets. Industries such as finance, retail, and telecommunications are leveraging the NVIDIA H100 GPU for:

- Real-time fraud detection.

- Customer behavior analysis.

- Predictive maintenance and supply chain optimization.

4. Enterprise AI Solutions

For enterprises, the NVIDIA H100 GPU facilitates the deployment of AI-driven applications that improve decision-making and operational efficiency. Examples include:

- Healthcare: Supports diagnostic imaging, treatment planning, and drug development.

- Finance: Enhances risk assessment, portfolio optimization, and algorithmic trading.

- Autonomous Systems: Powers self-driving cars, drones, and robotics.

Ecosystem and Software Support

The NVIDIA H100 GPU is part of NVIDIA’s comprehensive AI ecosystem, which includes hardware, software, and development tools. Key components of this ecosystem include:

- NVIDIA CUDA: Provides developers with the tools to harness the full power of the NVIDIA H100 GPU.

- NVIDIA Triton Inference Server: Simplifies the deployment of AI models in production environments.

- NVIDIA AI Enterprise: Offers an end-to-end software suite for building and deploying AI applications.

- NVIDIA Omniverse: Facilitates collaborative 3D design and simulation, leveraging the NVIDIA H100 GPU’s computational power.

Environmental Impact

As the demand for computational power grows, so does the need for energy-efficient solutions. The NVIDIA H100 GPU is designed with sustainability in mind, delivering higher performance per watt compared to previous generations. This efficiency reduces the environmental footprint of data centers, making the NVIDIA H100 GPU a more sustainable choice for organizations.
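Performance per watt matters because a job’s energy is power multiplied by run time: a GPU that draws more power can still use less energy per job if it finishes sufficiently sooner. The sketch below compares energy per job using maximum board power ratings (700 W for H100 SXM5, 400 W for A100 SXM4) as stand-ins for actual draw, which is an assumption; the 4x speedup is the best-case figure discussed earlier and will vary by workload.

```python
def job_energy_kwh(avg_power_w: float, hours: float) -> float:
    """Energy consumed by a job running at avg_power_w for the given number of hours."""
    return avg_power_w * hours / 1000.0


def relative_energy(new_power_w: float, old_power_w: float, speedup: float) -> float:
    """Energy per job on the new GPU divided by energy per job on the old one.

    The new GPU draws new_power_w / old_power_w times as much power but
    finishes in 1 / speedup of the time.
    """
    return (new_power_w / old_power_w) / speedup


H100_SXM5_POWER_W = 700.0  # assumed: maximum board power, not measured draw
A100_SXM4_POWER_W = 400.0  # assumed: maximum board power, not measured draw

print(relative_energy(H100_SXM5_POWER_W, A100_SXM4_POWER_W, speedup=4.0))  # 0.4375
print(H100_SXM5_POWER_W / A100_SXM4_POWER_W)  # break-even speedup: 1.75
```

Under these assumptions, any speedup above 1.75x means the H100 uses less energy for the same job.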

NVIDIA is also committed to advancing green computing through initiatives such as liquid cooling and renewable energy integration, further reducing the environmental impact of its technologies.

Competitive Landscape

In the competitive landscape of GPUs, the NVIDIA H100 GPU stands out for its combination of performance, efficiency, and versatility. While competitors such as AMD and Intel have made strides in GPU technology, the NVIDIA H100 GPU’s architectural innovations and ecosystem support give it a distinct edge.

Key differentiators include:

- Superior AI performance, driven by the Transformer Engine and FP8 support.

- Unmatched scalability, enabled by NVLink and Magnum IO.

- Comprehensive software support, ensuring seamless integration with existing workflows.

Challenges and Future Directions

Despite its groundbreaking capabilities, the NVIDIA H100 GPU faces challenges such as high cost and power consumption, which may limit its adoption in smaller organizations. NVIDIA is addressing these challenges through initiatives such as cloud-based GPU access, which allows users to leverage the NVIDIA H100 GPU’s power without upfront hardware investments.

Looking ahead, NVIDIA will likely continue pushing the boundaries of GPU technology. Future developments may include even greater integration of AI-specific hardware, enhanced energy efficiency, and expanded support for emerging workloads such as quantum computing simulation.

Conclusion

The NVIDIA H100 Tensor Core GPU is more than just a technological marvel; it is a catalyst for innovation across industries. By combining unparalleled performance with architectural advancements and ecosystem support, the NVIDIA H100 GPU is enabling organizations to tackle complex challenges and unlock new opportunities.

As AI and HPC continue to reshape the world, the NVIDIA H100 GPU is a testament to NVIDIA’s commitment to driving progress in accelerated computing. Whether in scientific research, enterprise applications, or AI development, the NVIDIA H100 GPU is poised to play a pivotal role in shaping the future of technology.
